“I like my coffee like I like my war. Cold.”

A couple of years after a pair of scientists at the University of Washington wrote a program that correctly added “that’s what she said” at the end of a sentence 72% of the time, researchers at the University of Edinburgh decided to give it a go. They trained a model on large amounts of language data to create jokes following the “I like my X like I like my Y, Z” structure, producing jokes like the one above, or the far less funny “I like my women like I like my camera … ready to flash.”

Sure, it looks like a joke, and sounds like a joke, but many argue that it lacks the one thing comedy can’t do without: it just isn’t funny.

It turns out that while computers are infinitely better than us at many a task, they just aren’t great at cracking jokes. But that hasn’t stopped researchers from building comedy-generating algorithms. And despite how enjoyable it must be to watch a machine struggle to come up with a decent joke, the reason why so many academics and scientists are exploring the fascinating world of computational humor isn’t purely whimsical.

Developers, scientists, product managers and academics are all working towards making human-machine interactions as natural and personal as a conversation between two friends, and for that, they need to tackle Natural Language Processing, teaching computers to process, analyze, and replicate the structures of our language. And that’s not an easy task. In fact, as a manual of Mathematical Linguistics points out, “these tasks are so hard that Turing could rightly make fluent conversation in natural language the centerpiece of his test for intelligence.”

Though you wouldn’t necessarily say so after a string of “funniest home videos” on YouTube, humor is one of the most sophisticated forms of human intelligence, scatological jokes and all. That’s partly because humorous language typically uses complex, ambiguous and incongruous expressions which require deep semantic interpretation.

And it’s why research into deep modelling of humor is lacking: it’s too complex, or, as researchers put it, AI-complete. That’s a category reserved for the hardest AI problems, those in which solving the particular computational problem is as hard as solving the central artificial intelligence question.

Finding the joke

We’ve been trying to crack a formula that can explain humor for thousands of years, from Aristotle to Freud, Kierkegaard to Monty Python. The first theory of humor (or rather, the first we know of) dates back to Ancient Greece. Known as the Superiority Theory, it posits that humor comes from others’ misfortunes. While it’s a perfect explanation as to why we let out a chuckle when someone slips on the sidewalk, it explains little else. Much later, in the early 20th century, Freud came up with the Relief Theory. He claimed that humor is a release of our accumulated inner desires, and that it happens when the conscious mind allows the expression of thoughts that are usually forbidden. Great for dirty, sarcastic or hostile humor, but still, not all jokes fall under this category.

Then, in the 70s, linguists rallied behind the Incongruity Theory: the idea, supported by philosophers Kant and Schopenhauer, that we laugh at violations of our expectations. A joke is therefore a two-part enterprise: it requires a set-up, which creates an expectation, and a punch-line, which subverts it.

This theory was one of the first that Diyi Yang, an assistant professor at the School of Interactive Computing at Georgia Tech, came across. Back in 2015, when she was at Carnegie Mellon, Yang used to wonder about humor. Not that she cares much for jokes: “I’m not a humorous person, but I like humor.” Humor is a crucial part of understanding human communication. “I think that if our computers can understand humor, they can better understand the true meaning of human language,” she said. “If you think about all those conversational agents like Google Assistant, Alexa, or Siri, if they had a better understanding of humor, they could make better decisions to improve the user experience.”

Humor could help her build intelligent systems that can provide natural, empathic human-computer interactions, so she conducted an independent research project to try and create computational models to discover the structures behind humor, recognize it, and even identify which words prompt humor in a sentence.

Yang dove deep into the linguistic theories of humor, identifying several semantic structures for each that she could use to train the models. One of them was the theory of incongruity, which explains why we find pictures of monkeys wearing suits and carrying briefcases hilarious, or, to cite another monkey-themed quip: “Why did the monkey fall out of the tree? Because it was dead.”

Linguistic theories of humor

In her research, Yang studied several latent structures behind humor, drawn not just from the incongruity theory but also from three others:

- Ambiguity. Humor and ambiguity often come together when a listener expects one meaning, but is forced to use another.

Did you hear about the guy whose whole left side was cut off? He’s all right now.

- Phonetic style. Some linguistic studies show that the phonetic properties of jokes (alliteration, word repetition, rhyme) can be as important as the content itself, if not more so. Many one-liners have a certain comic effect even when the joke isn’t necessarily funny. For example:

When you’ve seen one shopping centre, you’ve seen a mall.

- Interpersonal effect. This theory explains that humor is essentially associated with sentiment and subjectivity, especially in contexts ridden with hostility. That’s why sentences like the one below are funny(ish), though you could argue there’s barely any sophistication there.

Your village called. They want their idiot back.
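Of the structures listed above, phonetic style is the easiest to turn into numbers. The snippet below is a rough sketch, not the method used in Yang’s research: it approximates alliteration by counting words that share an initial letter, and it counts repeated words. The function name `phonetic_style_features` and both feature names are made up for illustration, and a real system would presumably work from pronunciations rather than spelling.

```python
import re
from collections import Counter


def phonetic_style_features(sentence: str) -> dict:
    """Crude, spelling-based stand-ins for two phonetic-style cues."""
    # Lowercase and split into words, keeping straight and curly apostrophes
    # inside contractions such as "you've".
    raw_words = re.findall(r"[a-z'’]+", sentence.lower())
    words = [w.strip("'’") for w in raw_words]
    words = [w for w in words if w]

    initials = Counter(w[0] for w in words)  # proxy for alliteration
    repeats = Counter(words)                 # exact word repetition

    return {
        "max_alliteration": max(initials.values(), default=0),
        "max_word_repetition": max(repeats.values(), default=0),
    }


features = phonetic_style_features(
    "When you've seen one shopping centre, you've seen a mall."
)
print(features)
```

For the shopping-centre one-liner, the three words starting with “s” and the repeated “you’ve seen” are what this proxy picks up. Rhyme, and the pun on “mall”, are beyond it.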

To perform automatic recognition of humor and humor anchor extraction (anchors being words like “Knock, knock” which give clues toward a humorous interaction), Yang needed a data set with both humorous and non-humorous examples. “It wasn’t easy to do the research,” Yang said. “It was a relatively under-investigated topic, we spent a lot of time getting the data.” She used Pun of the Day, the largest collection of humorous puns on the internet, and the 16000 One-Liners data set. As a control group, she used headlines from AP News and The New York Times, as well as text from Yahoo! Answers and proverbs.

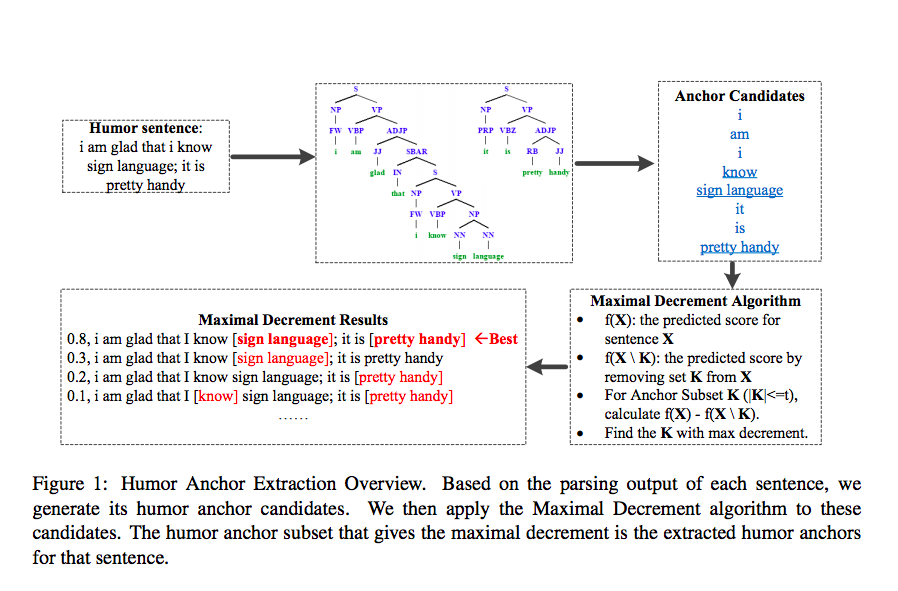

To identify the anchors, the words that set up the joke, Yang and her colleagues parsed each one-liner that satisfied the criteria for one of the humorous structures. Consider the following gag:

I am glad that I know sign language; it is pretty handy.

The humor anchors aren’t words like “know” or “am”, and they’re not the pair “know” and “pretty handy”. Rather, it’s the combination of “sign language” and “pretty handy” that enables the joke, no matter how dry it is. Each of the anchor candidates is assigned a predicted humor score, computed by a humor recognition classifier trained on all data points.

The humor anchor candidates that yield the highest score are then returned as the anchor set. The results were promising: compared against other methods of humor recognition used as baselines, such as Bag of Words, Language Model, and Word2Vec, their approach achieved better results.
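The selection step described above can be sketched in a few lines of Python. This is a minimal illustration and not Yang’s implementation: the function `extract_anchors`, the scorer `toy_humor_score`, and its hand-picked weights are all hypothetical, and the toy scorer merely stands in for a trained humor recognition classifier.

```python
from itertools import combinations
from typing import Callable, Sequence, Tuple


def extract_anchors(
    candidates: Sequence[str],
    score: Callable[[Tuple[str, ...]], float],
    max_size: int = 2,
) -> Tuple[str, ...]:
    """Return the candidate subset (up to max_size items) with the highest score."""
    best_subset: Tuple[str, ...] = ()
    best_score = float("-inf")
    for size in range(1, max_size + 1):
        for subset in combinations(candidates, size):
            current = score(subset)
            if current > best_score:
                best_subset, best_score = subset, current
    return best_subset


# Hypothetical numbers standing in for a trained classifier's predictions.
TOY_WEIGHTS = {"sign language": 0.5, "pretty handy": 0.4, "know": 0.05, "glad": 0.05}
TOY_PAIR_BONUS = {frozenset({"sign language", "pretty handy"}): 0.6}


def toy_humor_score(subset: Tuple[str, ...]) -> float:
    """Average the per-phrase weights, plus a bonus for the pair that makes the pun."""
    base = sum(TOY_WEIGHTS.get(phrase, 0.0) for phrase in subset) / len(subset)
    return base + TOY_PAIR_BONUS.get(frozenset(subset), 0.0)


candidates = ["glad", "know", "sign language", "pretty handy"]
anchors = extract_anchors(candidates, toy_humor_score)
print(anchors)  # ('sign language', 'pretty handy')
```

The exhaustive search over subsets is only practical because one-liners are short and the candidate list is small. The interesting work lives in the scorer, which is exactly the part this sketch fakes.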

But there’s a lot of work to be done, still. Especially since humor is not just about the words.

All about T * I * M * I * N * G *

There’s an old joke that goes something like this:

“Ask me the secret of good comedy.”

“What’s the sec—”

“Timing!”

We’ve all seen the same joke land beautifully when certain comedians say it, only to fall flat when you blurt it out in a misguided attempt to be funny at a family gathering. But, as Rhodri Marsden once pointed out, “that’s down to a combination of reputation, momentum, presence and timing.” For obvious reasons, training models to recognize timing isn’t as easy as asking them to detect a simple “that’s what she said” at the end of any given sentence.

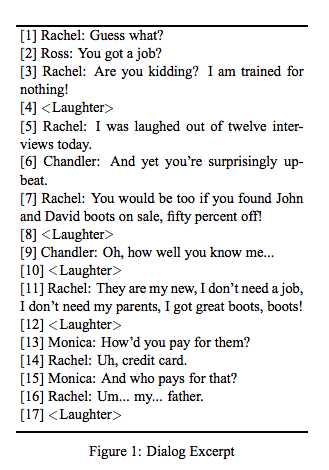

But still, there are some interesting studies on it. Amruta Purandare and Diane Litman from the Intelligent Systems Program at the University of Pittsburgh analyzed two hours of audio, from a total of 75 scenes in six different Friends episodes, marking each speaker turn that is followed by a laugh track.

They examined certain acoustic and linguistic features, such as tempo, pitch, number of words and how often certain words are repeated. Their analysis confirmed something we’ve known all along: there are significant differences between the prosodic characteristics of humorous and non-humorous speech, and they are consistent across genders and speakers. In funnier interactions, speakers tend to have a higher tempo, pitch, and energy, which is consistent with previous research showing that these features are associated with positive emotional states such as confidence, which are more likely to appear in humorous communication.

It also showed that, unsurprisingly, Chandler has the funniest interactions in those 75 scenes (22.8% of all jokes are his), and that laugh tracks, whose popularity has thankfully declined since the 80s and 90s, are actually good for something.

Research in computational humor is still in the very early stages, but computers seem to be getting better and better at it. There’s still a long road ahead, but fortunately, that road is paved with bad puns and cheesy one-liners. And while the jury is still out on whether “I like my coffee like I like my war. Cold.” is funny or not, I, for one, find it hilarious. I’m a woman of simple tastes. If it looks like a joke, and sounds like a joke, it probably is one.

The post Getting serious about humor: Can AI understand jokes? appeared first on Unbabel.